New Meta AI app provides a Llama 4-powered challenge to Gemini


What you need to know

- The Meta AI app is a repurposed version of the Meta View app that no longer focuses solely on Ray-Ban Meta smart glasses.

- Now, anyone with a Facebook or Meta account can query Meta's Llama 4 model through the Meta AI app.

- Ray-Ban glasses owners will still control their devices through the Meta AI app, but the app adds new capabilities like image generation and more conversational responses.

- It's rolling out to Android and iOS users on April 29, though the changes don't yet appear in the current version of the app.

The Meta AI app rebrands Meta View, the companion app for Ray-Ban Meta smart glasses, into a more general platform for anyone to get daily AI insights. And once Meta finishes rolling out the update on Tuesday, it’ll be much more than a fresh coat of paint.

In its announcement post, Meta explains that the app lets people "experience a personal AI designed around voice conversations with Meta AI inside a standalone app." It's built on Llama 4, Meta's smartest AI model, released in early April with 17 billion active parameters.

Originally designed to support Ray-Ban Meta glasses, the Meta AI app will continue to enable features like Live Translation and Live AI discussions, except that you can now continue a conversation started on your Ray-Bans from your phone, picking up "where you left off" in the History tab.

While the current Meta View / AI app only works with smart glasses, the version rolling out today is meant to incorporate Meta AI directly into the Home tab for anyone, with or without smart glasses.

The Meta AI app now integrates image generation and editing, along with standard Meta AI questions via voice or text. Ray-Ban Meta owners can take a photo and have Meta AI "add, remove, or change parts of the image." Users without glasses can still create images to export to social media apps like Instagram.

More generally, the Llama 4-backed Meta AI is supposed to be more “personal and relevant, and more conversational in tone.”

In the U.S. and Canada, Meta AI will allow for “personalized responses.” It will recall past queries or things you tell it to remember, while also taking into account your interests from other Meta apps like Facebook and Instagram.

Another new Meta AI app feature is a Discover feed, where “you can see the best prompts people are sharing, or remix them to make them your own.” You’ll only see prompts that people publicly post, so don’t worry about your private Meta AI queries showing up on someone else’s phone.

Lastly, Meta is promising a “voice demo built with full-duplex speech technology” in the Meta AI app. The gist is that this demo “delivers a more natural voice experience trained on conversational dialogue, so the AI is generating voice directly instead of reading written responses.”

Meta published a white paper about conversational AI last year, describing the benefits of "quick and dynamic turn-taking, overlapping speech, and backchanneling" for creating "meaningful and natural spoken dialogue," as opposed to the "half-duplex" approach typical of AI assistants, which waits for the user to stop talking before replying.

The Meta AI full-duplex demo can't remember information yet, and it's only available in the United States, Canada, Australia, and New Zealand. But it sounds like an intriguing, budding rival to the conversation flow of Gemini Live.

In a separate blog post, Meta promised current Ray-Ban Meta smart glasses owners that “the core features you’ve come to know” in the Meta View app “will stay the same,” and your current photos and settings will migrate to the new app without issue.

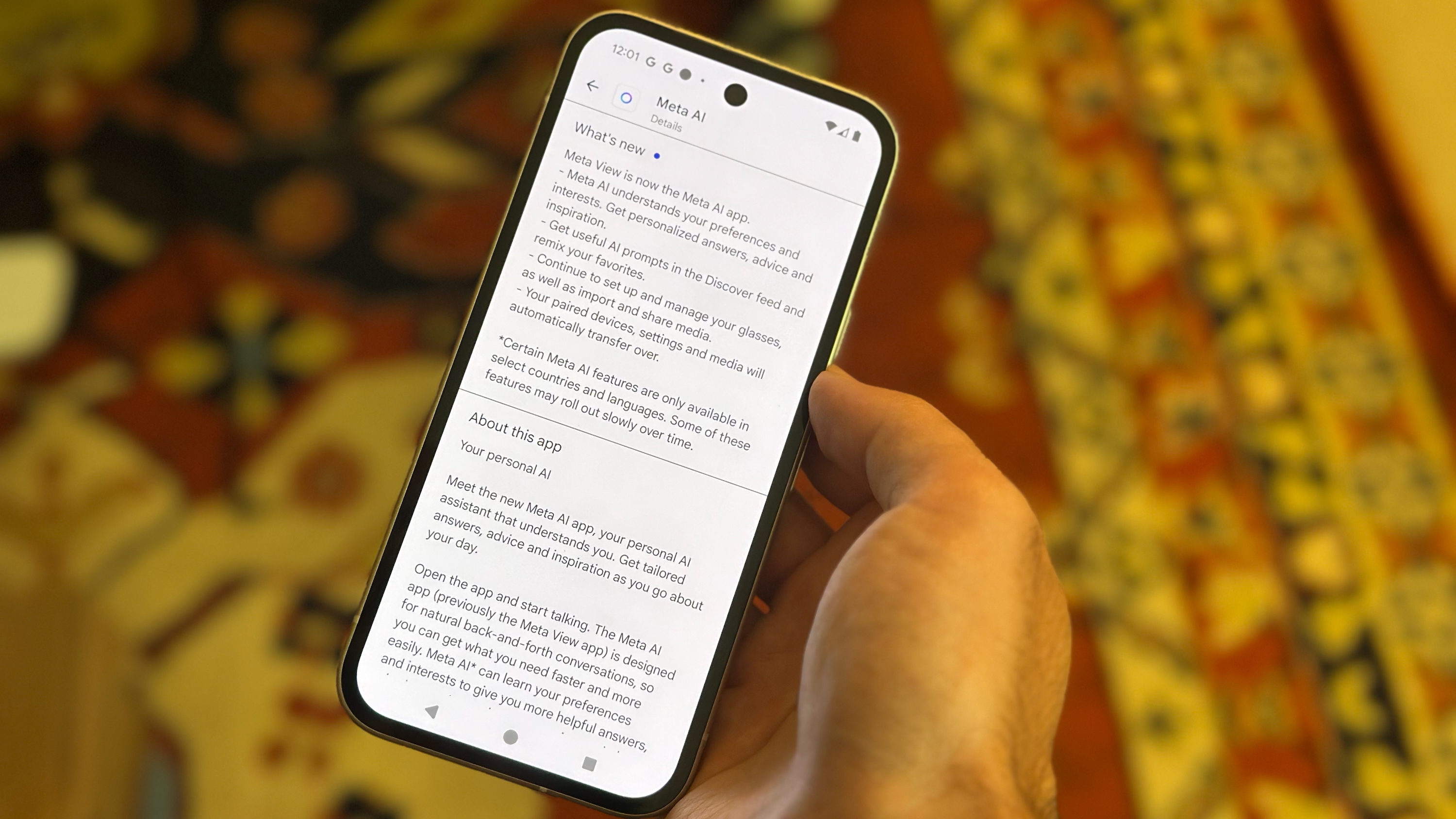

Oddly, while both the Play Store and App Store show the “Meta AI” app in search results, the current updated version doesn’t reflect the changelog and still requires Ray-Ban glasses to do anything.

We expect these changes to finish rolling out soon, and we're excited to test them. Meta AI and its Llama 4 model will challenge other standalone AI apps like Gemini and ChatGPT.
