Meta is coming for Google and OpenAI with its fresh Llama 4 models

What you need to know

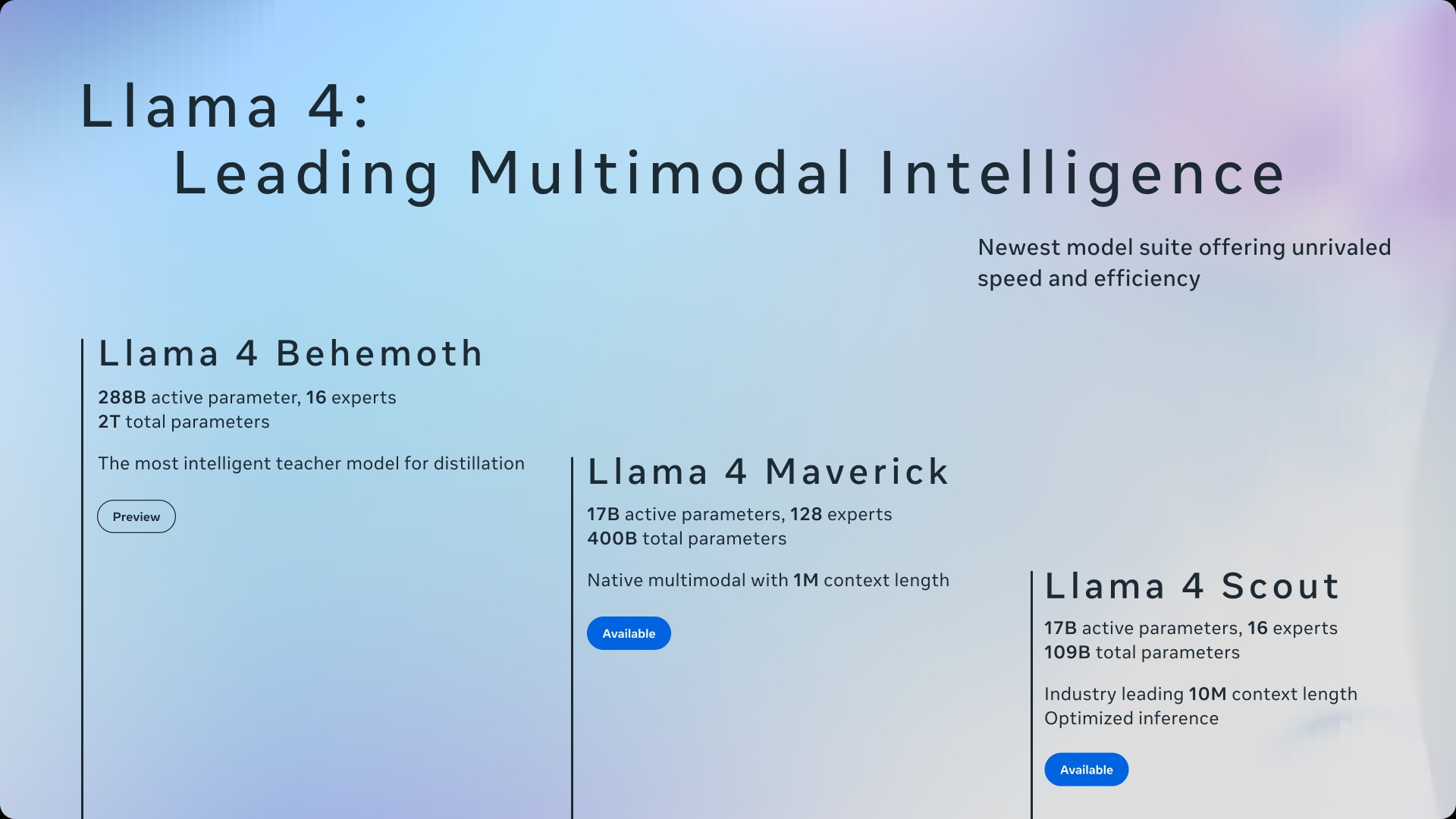

- Meta is rolling out two new models in the Llama 4 series, Scout and Maverick, and says both outperform rival models in its early tests.

- Scout is ideal for tackling huge documents, complex requests, and big codebases, while Maverick handles both text and visuals, perfect for smart assistants and chat interfaces.

- Both models are live on Llama.com and through partners like Hugging Face.

- Meta AI is getting smarter and is now available across WhatsApp, Messenger, and Instagram in 40 countries, though the multimodal features are U.S.-only and English-only for now.

Meta is kicking off its multimodal Llama 4 series with two fresh models: the lean-and-mean Scout and the powerhouse Maverick. The company says these new models are beating the competition pretty much across the board in early tests.

Maverick is the series’ all-around workhorse, built to handle both text and visuals, which makes it ideal for things like smart assistants and chat interfaces. On the flip side, Scout is the lighter, sharper one. It’s the one you want when you’re knee-deep in documents, untangling complex requests, or piecing together logic in massive codebases.

Llama 4 Scout and Maverick are now up for grabs on Llama.com and through Meta’s partners, including Hugging Face. Meta is also baking the new models into its Meta AI assistant, which is already rolling out across apps like WhatsApp, Messenger, and Instagram in 40 countries. That said, the multimodal features are U.S.-only and English-only for the time being.

MoE power

Meta says Llama 4 is a big step forward under the hood. It’s the company’s first time using a Mixture of Experts (MoE) setup, which makes things more efficient both when training the model and when answering your questions. Basically, an MoE model is split into specialized sub-networks called experts, and a router sends each token to only a few of them, so only a fraction of the model’s parameters (the "active" ones) do work on any given token.
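To make the routing idea concrete, here is a minimal sketch of a generic MoE layer in plain Python. It is not Meta's implementation: the sizes, the random weights, and the names `make_expert` and `moe_layer` are all hypothetical, chosen only to show a router scoring the experts and running just the top-scoring ones for a token.

```python
import math
import random

def softmax(scores):
    # Numerically stable softmax over a list of floats.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def make_expert(dim, rng):
    # Hypothetical expert: a single small linear layer (dim x dim).
    weights = [[rng.gauss(0, 0.1) for _ in range(dim)] for _ in range(dim)]
    def expert(x):
        return [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    return expert

def moe_layer(x, router_weights, experts, top_k=1):
    # The router produces one score per expert for this token.
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in router_weights]
    probs = softmax(scores)
    # Keep only the top_k experts; the rest are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    out = [0.0] * len(x)
    for i in chosen:
        gate = probs[i] / norm  # renormalized weight of this expert
        y = experts[i](x)
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, chosen

rng = random.Random(0)
DIM, NUM_EXPERTS = 8, 16  # illustrative sizes only
experts = [make_expert(DIM, rng) for _ in range(NUM_EXPERTS)]
router_weights = [[rng.gauss(0, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]
token = [rng.gauss(0, 1) for _ in range(DIM)]

output, used = moe_layer(token, router_weights, experts, top_k=1)
print(f"experts used for this token: {used} of {NUM_EXPERTS}")
```

The point of the sketch is the cost profile: all 16 toy experts exist in memory, but only the selected one runs for each token, which is why an MoE model's active parameter count can be much smaller than its total.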

Scout packs 17 billion active parameters spread across 16 expert modules. According to Meta’s specs, this setup outperforms Google’s Gemma 3, Gemini 2.0 Flash-Lite, and the open-source Mistral 3.1 on a bunch of common benchmarks, while keeping a lean profile that lets it run smoothly on just one Nvidia H100 GPU.

Scout shines at tasks like summarizing huge amounts of text and reasoning over big codebases. One standout feature is its massive context window of up to 10 million tokens, which lets Scout take in very large amounts of text and visual data in a single request.

Maverick’s strength

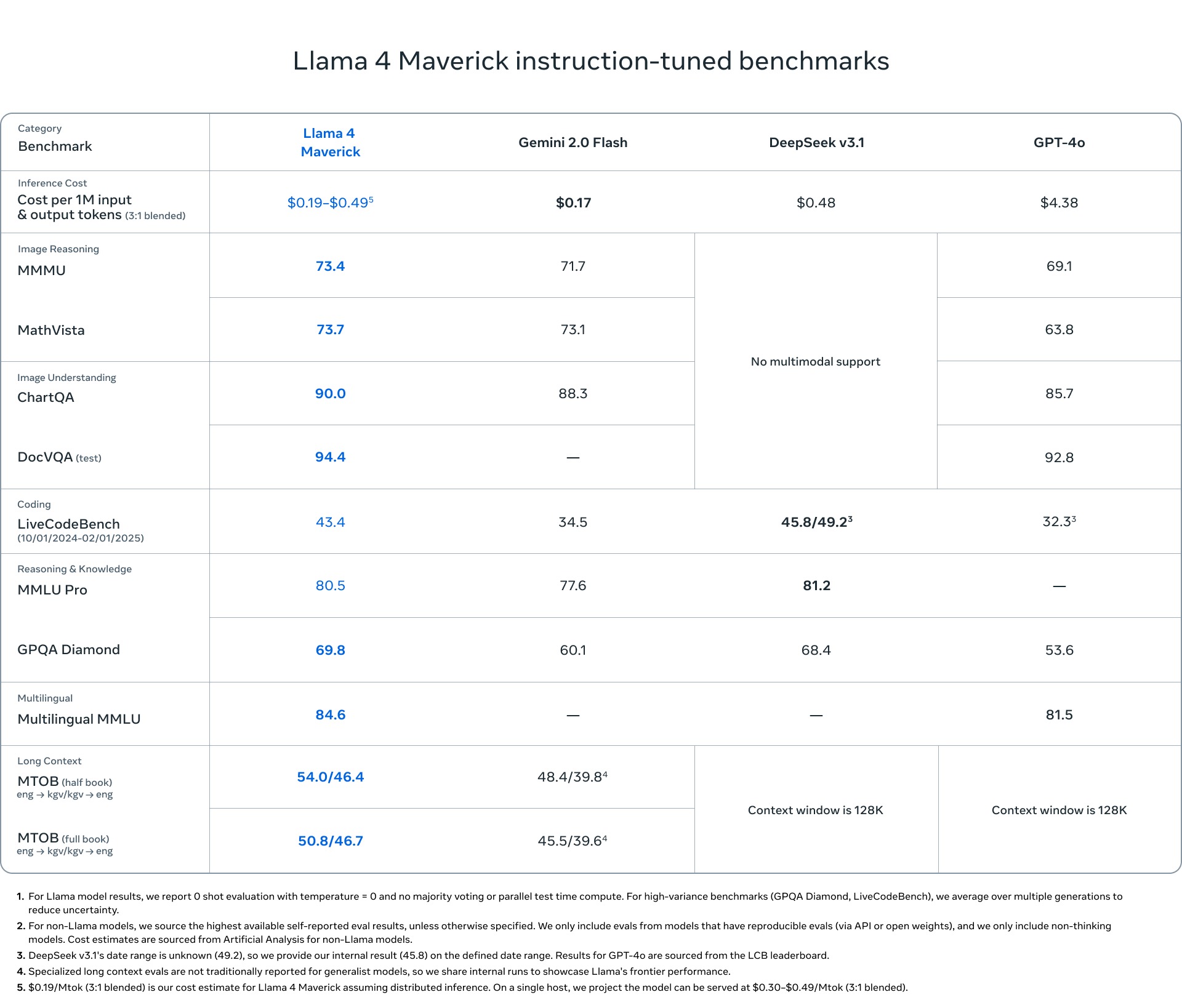

Meta gives Maverick the same accolade, saying it stacks up well against OpenAI’s GPT-4o and Google’s Gemini 2.0 Flash. What’s impressive is that it delivers similar results to DeepSeek-V3 for coding and logic, but with fewer than half the active parameters.

Maverick has 17 billion active parameters spread across 128 expert networks. That said, Maverick doesn’t quite match up to Google’s Gemini 2.5 Pro, Anthropic’s Claude 3.7 Sonnet, or OpenAI’s GPT-4.5 when it comes to top-tier performance.

Meta has also teased the Llama 4 Behemoth, which is still in training. When it’s ready, the company expects it to be one of the smartest LLMs out there.

Behemoth packs 288 billion active parameters across 16 experts, with a total parameter count nearing two trillion. Early tests show Behemoth outshines GPT-4.5, Claude 3.7 Sonnet, and Gemini 2.0 Pro in STEM tasks, especially when it comes to solving math problems. However, it still hasn’t surpassed Google’s Gemini 2.5 Pro in overall performance.
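For a rough sense of what those figures mean, the quick calculation below estimates how much of Behemoth is in use for any one token. It assumes the total is exactly two trillion, which rounds up the "nearing two trillion" figure, so treat the result as a ballpark rather than an official number.

```python
# Figures quoted in the article; the total is rounded to 2 trillion (assumption).
active_params = 288e9
total_params = 2e12

# Share of the model's parameters used per token.
active_fraction = active_params / total_params
print(f"active share per token: {active_fraction:.1%}")
```

That works out to about 14% of the model doing work on any given token, with the rest sitting idle until the router picks a different expert.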

Essentially, everything in Meta’s apps that taps into AI is getting smarter, thanks to more advanced logic in the system. This means you’ll see sharper responses, better image generation, and ads that hit closer to the mark.
